Research Ops: A Generative Study to Improve the State of Research Company-Wide

- background & challenge -

The COVID-19 pandemic started around late March 2020, and there was a lot of uncertainty about how long it would last. This project originally came out of a request from my manager for remote testing tools in the early summer of 2020. My colleague and I were able to complete that task, but I was frustrated. Research at the company (participant sourcing, how it was perceived, and actually conducting it) was seen as a low priority and very difficult to do. (According to Chris Avore from NASDAQ Design, our UX maturity at the company is limited and sits at the Early stage. Link: https://bit.ly/3ts3Q8r ). No single tool was going to fix the larger problems we faced with research at the company, and I wanted to dig deeper. My colleague and I discussed the idea with our manager and identified a couple of areas we wanted to cover. We wanted to better understand the current barriers and processes for user research within the organization in order to improve participant sourcing, increase the amount of research conducted, and improve the perception of research within the company.

- define -

Research Question:

What are the barriers to the user research process and how do these barriers impact the company?

Research Goals:

· Identify the biggest constraints and barriers to research efforts for team members, stakeholders and customers.

· Uncover how issues with research efforts affect the company.

· Ascertain potential solutions that would enable us to cost-effectively seize those opportunities.

- research participants -

Since research touches different departments in the company, we wanted to get input from a wide and diverse range of co-workers; we ended up conducting twenty stakeholder interviews with people who covered seventeen different products. Here is the breakdown by department:

· Design: 3

· PM: 5

· TPM: 2

· Customer Service: 5

· Development: 3

· QA: 2

The interview sessions were 30 minutes and were done remotely using Microsoft Teams.

- methodology -

Since this was a generative research effort, we needed to understand the why behind the problems. My colleague and I brainstormed the approach; we wanted to do a mixed-methods study to get a more comprehensive overview of the issues. Structure-wise, we decided to split the research into two areas: our findings with recommended solutions, and a case study highlighting a past research project that went well and how it saved the company time and money. The case study was a way to persuade stakeholders of the ROI of research and its benefits to the company. At the end of the discussion, I drafted a study plan for how to move forward. The methods below enabled us to investigate and explore the issues from both the qualitative and quantitative sides:

· Desk Research (front stage and back stage actions): We used this method to investigate whether any work had been done in the past to explore research issues. We wanted to learn what had been done not only inside the company but outside as well. We also wanted to learn about sentiment and experiences from the customer’s point of view. I contacted Customer Service with my request and received an Excel sheet full of stories and quotes from customers who had participated in past research efforts with the company.

· Desk Research (academic sources): We also studied four industry publications from experts on the ROI of effective research. This method was chosen to lend credibility to the field of research and to show how transformative it could be for companies.

· Stakeholder interviews: We used this method to learn about the challenges and constraints of past research efforts. My colleague and I took turns moderating and observing during the sessions. The word “interview” can cause some people anxiety, especially certain participants we reached out to. I solved this issue by relabeling the session as a “retro”, a term our co-workers outside of Design knew and understood. We were able to sign up more participants after that change. The questions were also modeled after a retro to gain a good sense of what the participant had experienced.

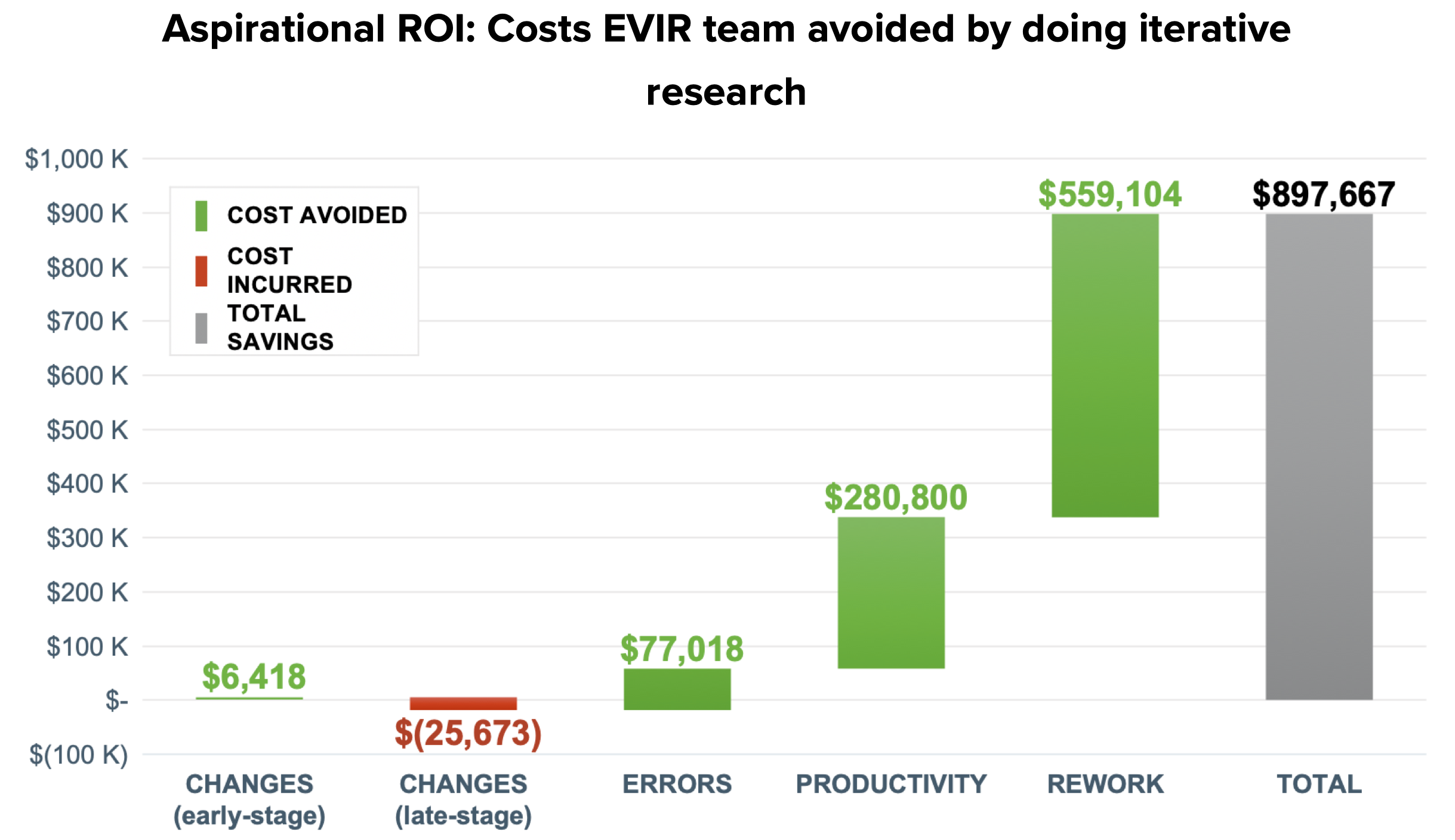

· Descriptive, Diagnostic & Predictive Statistics: We used this method in two areas: quantifying the research findings, and projecting for the case study how much time and money research saved.

· Concept Testing: We partnered with the GTC Maps team to do a concept test for the participant sourcing side of the framework to gather participants for one of their upcoming studies. More details on the test will be provided later on in the case study.

- analysis -

Because the effort was so big, it ran for a month, and we split up the work here too:

· My tasks: Affinity mapping, insights analysis, ROI metrics & Research case study

· My colleague’s tasks: Journey Map for the internal departments, Roadmap and stakeholder participation chart.

For the qualitative side of the research that I covered, I started by doing affinity mapping and pulling insights from the findings. I like affinity mapping because it gives you a zoomed-out view of the qualitative data and makes it easier to detect patterns. Microsoft Teams creates transcripts of the interviews. I gathered all of the transcripts and started tagging different areas (issues, motivations, trends, goals, tasks, quotes, emotions, facts, needs) with different colors. Once I completed tagging, I copied all of the tags into a Miro board for affinity mapping. I first set aside a section for each question to see if any patterns were forming in the tags. I then began to see overarching themes develop and noted them at the top. (I went through a couple of mapping iterations for refinement.) From the themes, I tried to pull out the deeper context for insights. After I completed my side of the analysis, I synced with my colleague to discuss the results. The findings from this final mapping were the foundation for the artifacts created afterwards.

For the quantitative side of the research that I covered, I gathered suggestions from our stakeholders for a good example of a research project that went well. Once I had my example (a previous EVIR beta), I used industry-standard formulas to calculate how much time and money were saved because that team conducted research before and throughout the beta. From the affinity mapping findings, I was also able to quantify statistics for the insights.
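As an illustration of the last step, here is a minimal sketch of how tagged notes from an affinity map could be turned into per-theme statistics. It is not the process we actually used; the function name `theme_shares`, the participant IDs, and the theme labels are all hypothetical, and the sample rows are placeholders rather than results from the study.

```python
from collections import Counter


def theme_shares(tagged_notes, total_participants):
    """Return {theme: fraction of participants who mentioned that theme}.

    tagged_notes is an iterable of (participant_id, theme) pairs.
    """
    # Count each participant at most once per theme, even if they raised it repeatedly.
    unique_pairs = set(tagged_notes)
    counts = Counter(theme for _, theme in unique_pairs)
    return {theme: n / total_participants for theme, n in counts.items()}


# Placeholder rows for illustration only (hypothetical IDs and themes).
example_notes = [
    ("P01", "participant sourcing"),
    ("P01", "participant sourcing"),  # repeated mention by the same participant
    ("P02", "participant sourcing"),
    ("P02", "findings not shared"),
    ("P03", "findings not shared"),
    ("P04", "participant sourcing"),
]

shares = theme_shares(example_notes, total_participants=4)
for theme, share in sorted(shares.items()):
    print(f"{theme}: {share:.0%} of participants")
```

Counting each participant once per theme keeps a single talkative interviewee from inflating how widespread an issue appears.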

After we created a PowerPoint deck, we sought feedback from stakeholders. We quickly realized that we needed to tailor our research presentation to different audiences and couldn’t rely on a single deck to evangelize this new idea. This resulted in numerous deck customizations and refinement sessions with stakeholders. Once we had our polished decks, we pitched our ideas for buy-in across six departments over a two-month period. At the end, we also presented to senior leadership and the VP of Product.

- learnings -

Overall Findings

Insights

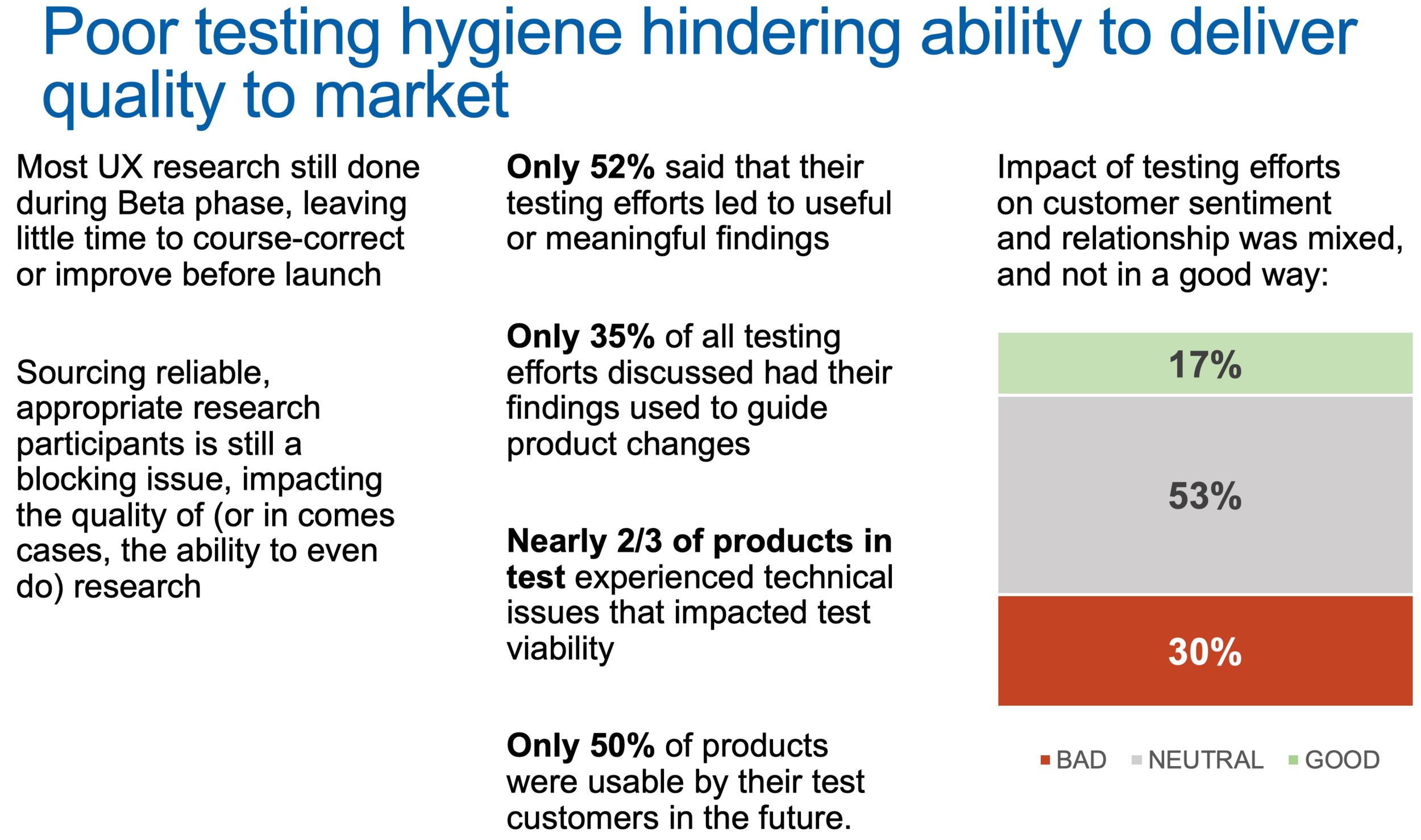

Strategic Insight: A lack of discovery research endangers the direction of future work and makes it harder to keep a pulse on customer needs. The lack of a single source of truth (SSOT) for research hurts the perception of research’s value as a strategic asset to the company. Customers feel they do not get a return on their time when they do not hear back on past studies or the product doesn’t improve, and they are more likely to stop using our services.

Stakeholder Insight: A lack of insight into future technical issues causes rework and delays speed-to-market timelines for features and new products.

Product Insight: Failing to obtain quality research participants in statistically significant numbers hampers research efforts to uncover issues and hinders product development.

Based on the insights, we need a ResearchOps framework to facilitate research. What does our framework need in order for research to be successful?

• Improve how we source research participants to reduce both internal stakeholder time/effort and customer test-fatigue.

• Define a short-list of right-sized, sprint-friendly, and easily repeatable research activities for the most common learning goals/themes.

• Operationalize how findings are collected and communicated, to maximize visibility and their influence on a product’s direction.

• Leverage existing tools and tactics to further improve efficiency and effectiveness of research activities by an estimated 30+%.

• Incorporate a social component.

Impact & Results

After pitching our idea to the department head, senior leadership and the Vice President of Product, we were awarded $15,000 to start the ResearchOps program. We were also able to “test run” the beginning of our framework (customer sourcing) with a usability testing session that was needed for GTC Maps. Originally, it took months to get a list of candidates from CX and sign up participants; we were able to get that down to a couple of hours. More refinement of this process will be detailed in another project.

- next steps & reflections -

Next Steps

Finish building out the last phase of the framework.

Reflections

o During the stakeholder interviews, some of the participants were reluctant to be honest because they were scared some of the content would get back to their managers. I was able to put their minds at ease by reminding them that our sessions were confidential and that we always anonymized the names of internal co-workers. This experience taught me that trust between an interviewer and interviewee is very important, especially when dealing with sensitive data. The interview needs to be a safe place for the interviewee to speak.

o Enact a “certificate” program similar to Jen Cardello’s program to make sure co-workers are educated in democratized UX research activities.

o The first phase involved my colleague and me working with Customer Service to build out a shared participant bank for research. What we learned was that they had no standardized system for sourcing participants. I realized that during the stakeholder interviews we had never asked them how they sourced participants, only about their past experiences with research studies. This discovery resulted in more preliminary work to devise a system for working with Customer Service.