Beta testing for Coach’s new iOS iteration and connected equipment

- Background & Challenges -

When the Coach app first launched, it was available only on Android. The next iteration brought it to iOS, along with two other changes: driver self-setup on their own mobile phones, and a new camera system with an improved outward-facing camera and a secondary inward-facing camera. The beta focused on three areas:

o Onboarding process: ease of use, and success of driver and asset association

o Driver-facing data points and night video

o Interior camera

We wanted to get user feedback to see if this version was ready for release to the general public.

- Define -

Research Question: How will the introduction of the new flows and cameras affect drivers’ setup and driving experience?

Research Goal: Evaluate driver interaction with the latest iteration of Coach and its connected hardware.

Assumptions:

o Drivers will dislike the cameras: In the past, drivers have resisted the idea of their employers using interior-facing cameras. Part of why they drive is the freedom the profession offers compared with a traditional office, and they have described interior cameras as an invasion of their privacy while working.

o Drivers will be reluctant to use personal cell phones: In past research, some drivers expressed reservations about using their own phones for work, citing extra fees added to their plans, additional monitoring by their employers, and union issues.

- Participant Recruitment -

Unfortunately, Design was not involved in selecting the companies that would take part in the research (this caused issues later on). Early on, PM and Customer Service were the only departments communicating with prospective companies. The criteria were as follows:

- Role: Drivers and dispatchers who had used the previous version of Coach (Android)

- Vertical: Utilities, construction, motor coach, local delivery, PAX (passenger buses such as city or school buses)

- Number of participants: 15

- Incentives: We originally chose not to tell participants they would receive an incentive until after they finished their final interview. The incentive was $25 per participant and was distributed via a tool called Tremendous.

The Customer Service Manager and PM ultimately recruited three customers for the beta, SEFNCO, O’Reilly and Hooper Corporation, with an estimated five drivers from each group. That would have brought the total to fifteen participants.

- Methodology -

Early on, I met with the Coach team during planning to align on testing areas and goals. Toward the end, I created a Slack channel to keep all of the stakeholders involved in the beta in contact; it helped with collaboration and with questions that arose during the preparation and live stages. I also collaborated closely with the warehouse lead on the logistics of shipping the testing hardware and setting up the on-site beta tests.

On the research side, I considered user interviews, but felt more methods were needed to gather information on usability and sentiment. The beta was a good opportunity for a mixed-methods approach combining quantitative and qualitative methods. I chose the following:

- Usability Testing: I wanted to learn more about the usability of the new UI designs, so I ran this test before participants started the beta to gauge the ease of use of the new onboarding flows. The sessions were moderated and on-site: each participant set up the camera and app themselves in their own vehicle. Each session was originally estimated at half an hour.

- Diary Study & User Interviews: This method was a good way to observe use of the product over a longer period, around three weeks. For each group, I scheduled weekly check-in phone calls for feedback, plus final video interviews over Microsoft Teams. The final interview questions focused on the experience holistically rather than day to day. I wrote a standard list of questions to cover during the check-ins, which also made the responses easier to analyze afterward. I also monitored the back-end analytics with the team to check the incoming data for accuracy.

- SUS Questionnaire: In addition to the usability testing before the beta, I wanted to learn what users thought of the product holistically. I distributed a SurveyMonkey link before the beta and after the final interviews. The starting benchmark was 48.5; the goal was to score above 68, which is the average SUS score.
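SUS scoring follows a fixed formula, which is how raw questionnaire answers become numbers like the 48.5 benchmark and the 68 target. The sketch below is a minimal Python illustration of the standard SUS scoring rule, assuming each respondent’s ten answers are stored as integers from 1 to 5 in questionnaire order; the function names (`sus_score`, `group_average`, `meets_goal`) are hypothetical and not part of SurveyMonkey or any tool used in the study.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Convert one respondent's ten 1-5 SUS answers into a 0-100 score."""
    if len(responses) != 10:
        raise ValueError("SUS expects exactly 10 item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be on the 1-5 scale")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: contribution = response - 1
            total += response - 1
        else:
            # Even items are negatively worded: contribution = 5 - response
            total += 5 - response
    # Contributions sum to 0-40; multiplying by 2.5 maps them onto 0-100
    return total * 2.5


def group_average(all_responses: list[list[int]]) -> float:
    """Average SUS score across a group of respondents (scored individually first)."""
    return mean(sus_score(r) for r in all_responses)


def meets_goal(score: float, target: float = 68.0) -> bool:
    """True if a score is above the target (68 is the commonly cited SUS average)."""
    return score > target
```

For example, a respondent who answers 3 to every item scores 50.0. Scoring each respondent before averaging matches how a group average SUS score is normally reported.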

I ran into a couple of problems during testing. With the first customer, SEFNCO, we had issues with the on-site setup: participants struggled to set up the TestFlight app to run the beta build, and then hit login issues once they reached the app. Additionally, the new GoPro cameras we planned to use for filming didn’t work, so the team and I had to pivot to filming with our phones. The resulting video was poor: very shaky, shot at odd angles and overly long. (Because the cameras didn’t work, SEFNCO was the only group to have usability testing.)

Two other issues stemmed from participant sourcing and from beta planning with the PM.

The second customer wanted to participate in the beta only in a limited way. I didn’t know it at the time, but the company wanted to try out the new camera setup without letting us contact their drivers, saying the drivers were too busy. The PM knew about this caveat but still allowed the company to participate. This was a wasted opportunity: I wasn’t able to gather additional participant data, only the back-end analytics on the data flow.

When it came to beta planning and scheduling, the PM disengaged early on. The Lead Designer and I tried a couple of strategies to re-engage her. I introduced the ResearchOps templates designed for betas to aid planning and track tasks, hoping the problem was organizational; that did not work. The Lead Designer and I also raised the issue directly in meetings several times, but were met only with vague promises about when her tasks would be finished. Two months before the beta, no activity had occurred, which was troubling because the PM is responsible for setting up and running this type of event. Since this was a high-profile beta, we had one last option: the Lead Designer escalated the issue to the Head of Product Management. She was concerned and gave us leeway to start coordinating the beta with stakeholders and customers. This proved to be a good move, because the PM left the company after the first customer finished its beta cycle.

- Analysis -

Overall, a lot of data was generated from the research activities.

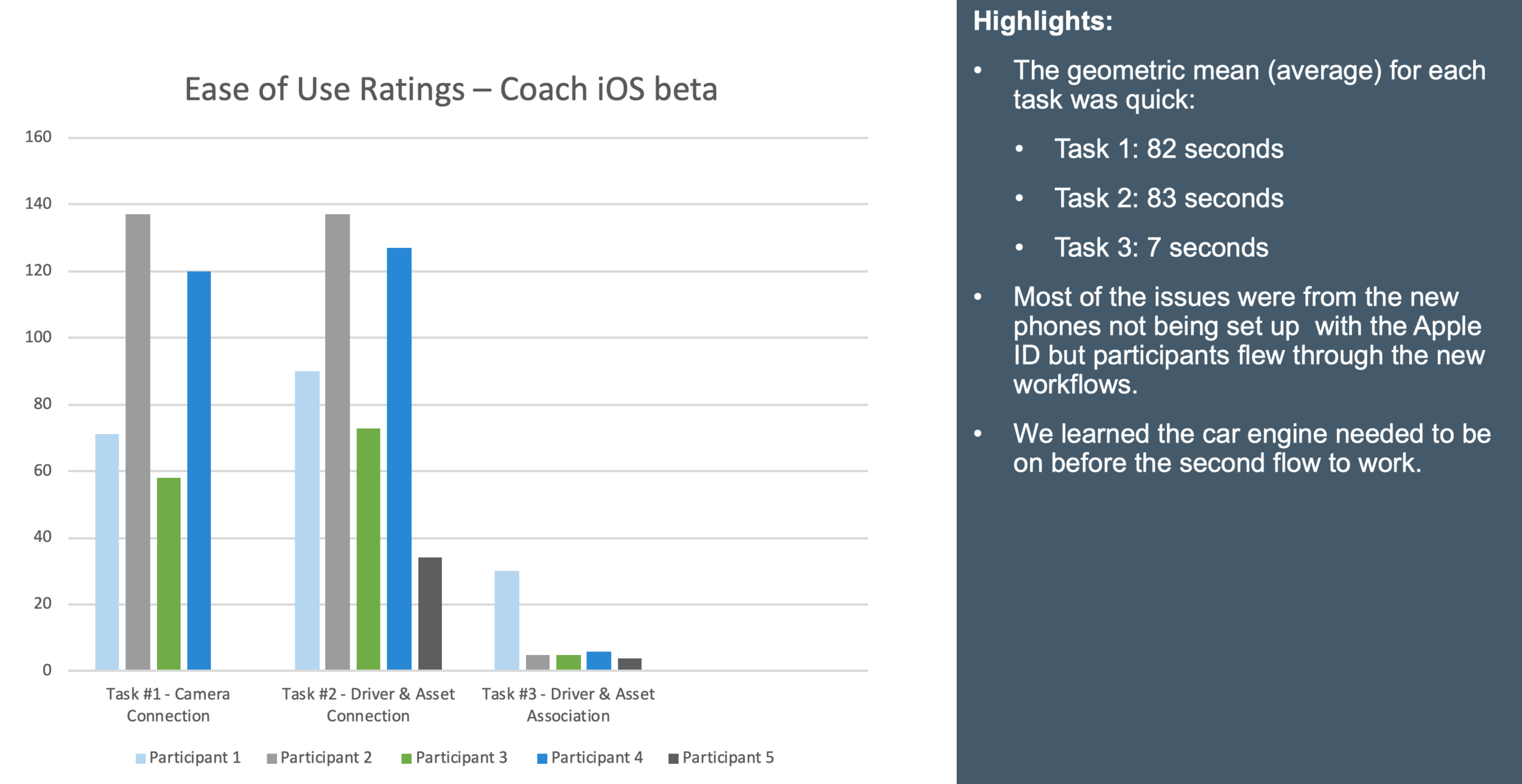

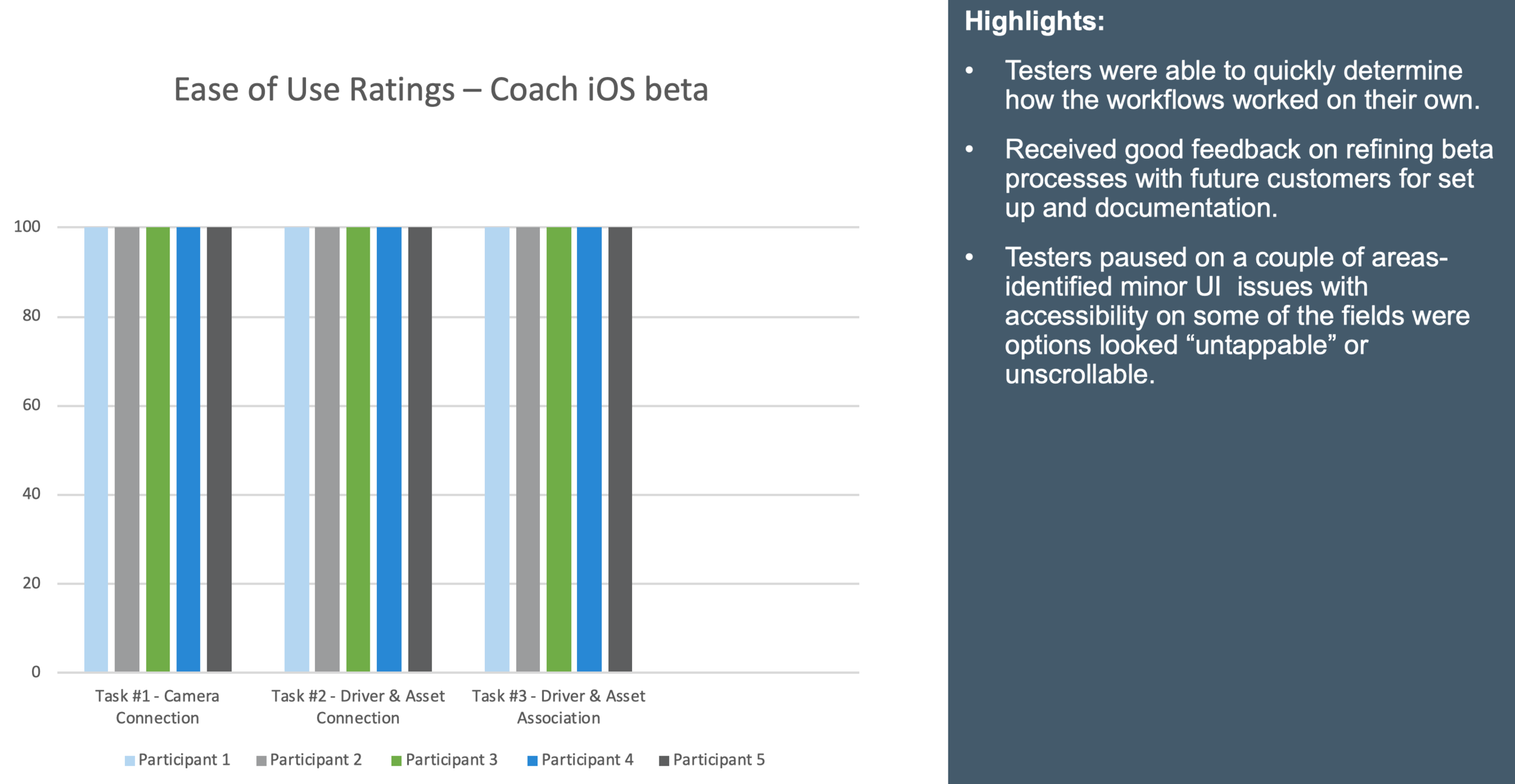

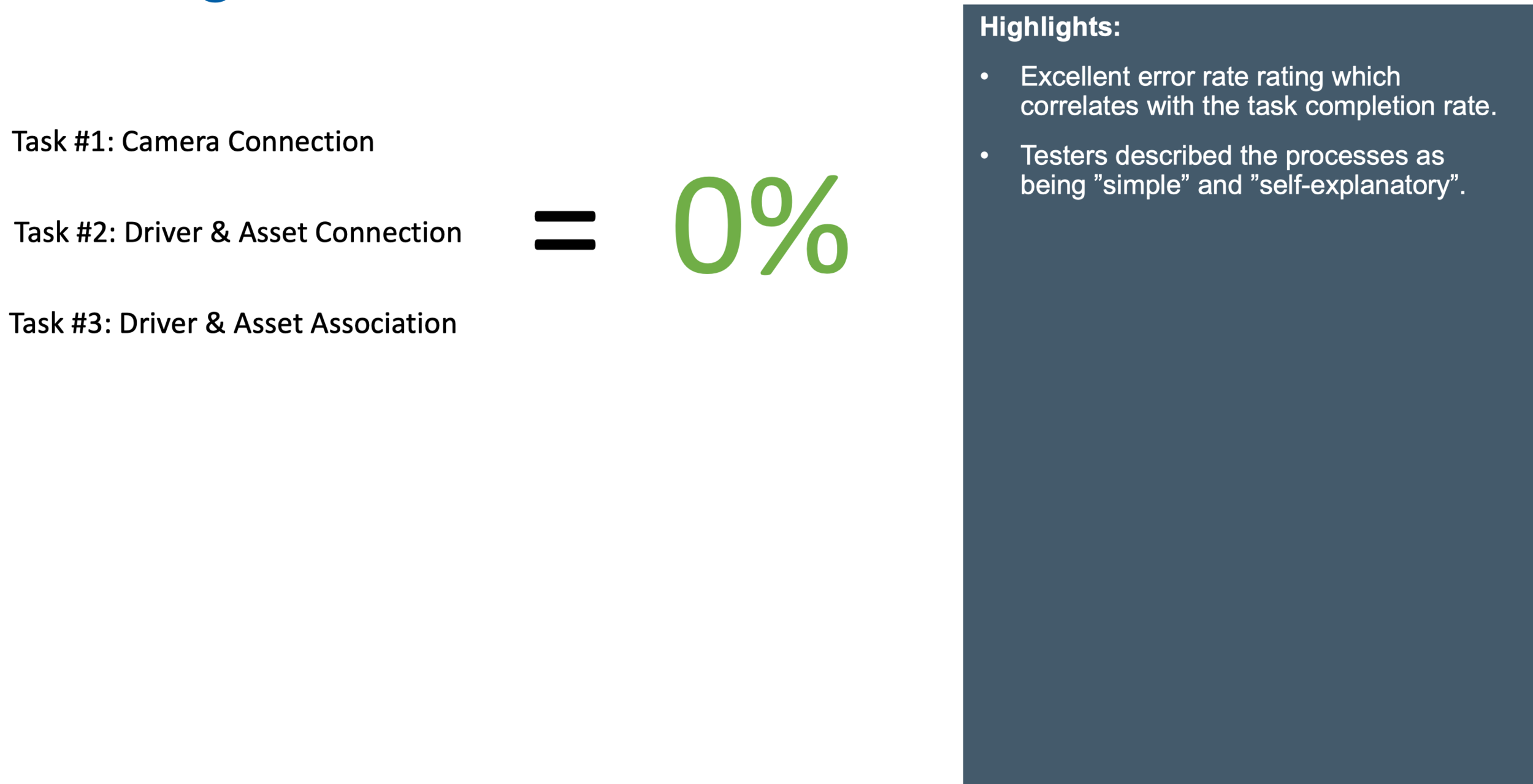

On the quantitative side, I calculated the usability testing metrics: time on task, task completion rate and error rate. I also calculated the group average SUS score. Since “perceived data inaccuracies” were an issue, I compared the number of times users complained against what the system recorded, and reviewed that comparison with the development team.
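The three usability metrics are simple averages and ratios over observed task attempts. The following is a minimal Python sketch, assuming each attempt was logged with its duration, whether it was completed, and an error count; the `TaskAttempt` record and `task_metrics` function are hypothetical, and error rate here means errors per attempt, which is only one common definition (another divides errors by the number of error opportunities).

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    """One participant's attempt at one usability task (hypothetical record shape)."""
    participant: str
    task: str
    seconds: float   # time on task, in seconds
    completed: bool  # whether the participant finished the task
    errors: int      # number of errors observed during the attempt


def task_metrics(attempts: list[TaskAttempt], task: str) -> dict[str, float]:
    """Summarize time on task, completion rate and error rate for one task."""
    rows = [a for a in attempts if a.task == task]
    if not rows:
        raise ValueError(f"no attempts recorded for task {task!r}")
    return {
        # Mean time on task across all attempts (some teams use successful attempts only)
        "mean_time_on_task_s": mean(a.seconds for a in rows),
        # Share of attempts that were completed
        "completion_rate": sum(a.completed for a in rows) / len(rows),
        # Errors per attempt (assumed definition; see lead-in)
        "error_rate": sum(a.errors for a in rows) / len(rows),
    }
```

Computing the metrics per task, rather than pooling every task, keeps one difficult onboarding step (such as TestFlight setup or login) from being hidden inside an overall average.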

On the qualitative side, I used affinity mapping to make sense of the data. I like affinity mapping because it gives a zoomed-out view of the qualitative data and makes patterns easier to detect. Microsoft Teams creates transcripts of the interviews, so I gathered all of the transcripts and tagged different areas (issues, motivations, trends, goals, tasks, quotes, emotions, facts, needs) with different colors. Once tagging was complete, I copied all of the tags into a Miro board for affinity mapping. I first set up a section for each question (from the weekly interviews and the final interviews) to see whether patterns were forming in the tags. Overarching themes then began to emerge, and I noted them at the top. From the themes, I pulled out the deeper context for insights and wrote recommendations for solutions. I also created a video reel capturing user sentiment about the product and how it helps or hinders their work.

After completing the analysis, I created two report decks: the first, requested for SEFNCO alone, and a second at the end, after all of the beta participants had completed the study. Additionally, I worked with the new PM and Lead Designer to create a third deck presenting the findings to the Seattle office during our Product Council meeting.

Affinity Mapping work

- Learnings -

Overall Findings

Surprises:

o Drivers had a more positive outlook on the cameras than expected, even the interior ones. Could this attitude reflect a change in demographics?

o Audio issues with the camera. In hindsight this makes sense: most of the drivers are in large diesel trucks, so the cab is very loud, and I can see why this would be a problem.

o Scoring system: the scores did not make sense to drivers or dispatchers. Is a larger number good or bad? Dispatchers were also worried about sifting through too much data for larger fleets.

o I was also surprised and disappointed to learn how some managers wanted to use our products: not as a tool or aid for driver improvement, but as a mechanism for surveillance, because they distrusted their drivers.

Insights

Strategic Insight: High usability testing scores suggest the new iOS version is intuitive and easy to use, which is good news for expansion into upcoming markets.

Stakeholder Insight: Coach 2.0 is not ready for launch; more contextual data is a high priority and is needed for the camera to provide a good user experience.

Product Insight:

High usability testing scores suggest the new iOS version is intuitive and easy to use. We estimate early and high adoption of the product.

Drivers were upset not by the presence of the interior cameras but by perceived data inaccuracies. In terms of sentiment, the large majority see the camera as a positive tool. Drivers are getting used to interior cameras, and we can promote this angle with customers.

Impact

The product direction changed: the launch was paused to fix the camera issues and improve accuracy. This prevented a disastrous release and the possible loss of five large clients.

- Next Steps & Reflections -

Next Steps

The Coach team is working on these changes post-beta, based on the findings and insights:

o Lack of contextual data: Adding more data streams to improve how the camera sees and interprets events.

o Change in model: More research will be done on an asset-based system versus a driver-based one.

o Driver app volume control: How can drivers adjust the volume of alerts, and would this differ across situations?

o Surfacing data: How to surface the most important data or insights to dispatchers, and reimagining the scoring system.

o Main bugs/issues: All eight major issues that rolled up were resolved. Each dealt with data in some form, including data latency, WiFi disconnection, login issues, app-to-camera connection and UI contrast.

Reflections

o Pre-beta setup and on-site filming issues: I realized that the SEFNCO team had not received much setup support before the beta, and that the poor video footage from the usability tests stemmed partly from a lack of filming guidelines and partly from the videographer losing track of filming while troubleshooting the pre-setup issues. To address this, I wrote a pre-beta checklist of setup items in the documentation deck, which is reviewed with the customer before their beta begins. I also wrote good-practice guidelines for filming, which were added to our beta setup template. These efforts greatly streamlined setup for the third customer, Hooper Corporation.

o Picky beta participants: This situation was frustrating, and at the time I worried that I wouldn’t get enough participants. I realized the problem goes beyond my frustration: the company also wastes money if we don’t get the needed feedback from these events. To avoid this in the future, I helped the ResearchOps team set up new guidelines for companies that want to be beta research participants. There are a few key expectations. First, we (the researchers) need unfettered access to participants for feedback during the event. Second, we need to be able to run a study in its entirety, not partially. Third, managers need to make sure their employees are on board with participating.